If you're anything like me, the mere mention of the word "grade" has a tendency to raise your blood pressure. Our students often assume they are the only ones who worry about grades, but if my experience is any indication, anxiety about grades is also a central feature of faculty life. I admit that this is somewhat strange. We are, after all, the ones who are in control of the grade. And for the most part, we have complete freedom in setting the standards for determining those grades. So what is there to be anxious about?

For starters, we worry about the meaning of our grades. We spend a lot of time thinking about what our grades should mean (should they be a measure of performance, competency, growth, or effort?). But we also worry about whether our grades have meaning. And by that I mean we worry that, whatever we've decided about the meaning of our grades, our practices don't clearly communicate that meaning to those who receive them (whether students or the outside world). There is nothing more demoralizing than the thought that the countless hours we spend grading might be dismissed as meaningless. And this is why some of us get upset about grade inflation (or, more specifically, grade compression), why others get upset when faculty teaching similar courses have varying standards, and why all of us get upset when we learn that some of our students think our grades are communicating arbitrary preferences. Each of these cases reveals that grading, like all communication, is a collective act. And as the collective meaning of our practices is eroded, we are right to worry that the time we spend working to communicate in this language is wasted effort.

We also worry about whether our grading practices are moral. This is just a fancy, helps-my-alliterative-title way of saying that we work hard to ensure that our grading practices are fair to our students. And many of us--particularly those of us (me!) whose area of expertise is justice--are in a near constant state of worry that they might not be. There is certainly a good amount of disagreement about what constitutes fairness in this domain (and for those who are interested in this question, I highly recommend Daryl Close's "Fair Grades" in Teaching Philosophy), but few of us would admit to having no principle of fairness guiding our grading practices. Although some of the anger about grade compression and variable standards is tied to the meaning of grades, my experience has been that most of the anger is actually about justice. When we learn that students in other classes are getting higher grades for lower quality work, we are rightfully angry that the system (and particularly the "grade inflators") has treated our students unfairly. The basic idea here is that most of us work hard to create a system of grading that ensures students in similar circumstances will be treated similarly. This work can be as simple as blind grading of individual assignments or as complicated as campaigning for consistent grading standards across departments, campuses, or even institutions.

Finally, we are continually anxious about how we might manage the significant workload of grading when we have approximately 749 other tasks to complete within any given week. And while one might think this concern is most pressing for faculty at research institutions, the trade-off is significant at teaching institutions, as well. Every hour we spend grading is one fewer hour we can spend planning our classes, designing assignments, and working individually with students. And who among us is proud of the work we do in the classroom when we're operating on three hours of sleep after a late-night grading session scrambling to get assignments back to students in a reasonable amount of time? When we end up in these situations, we know we have a problem, but we're often lost at sea when it comes time to create a manageable system.

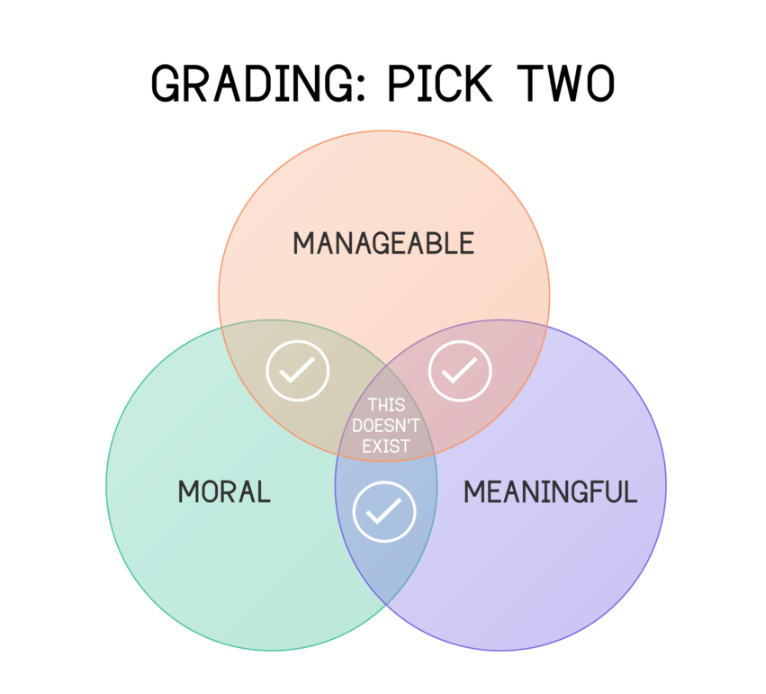

When I first began teaching, I assumed my anxiety in each of these domains would eventually dissipate. I was certain that there had to be an approach to grading that was simultaneously meaningful, moral, and manageable, and that, with enough time and experimentation, I would eventually discover it. Yet the more I tried to get a handle on anxiety in one domain, the more I seemed to increase my anxiety in another. [1] I came to believe that the system was stacked against us. It had trapped us into a corner where, at best, we could maximize two goals at the expense of the third. Mirroring the "fast, good, cheap" meme that designers love so much, my pessimistic grading meme might look something like this:

Things got even more depressing when my work for the CTE introduced me to the empirical research on grading reliability and the consequences of the current system for our students and their learning. I began to long for a job at one of the many institutions that have abandoned traditional grading altogether. Failing that, I took Jim Lang's advice about false hopes for grading to heart: "grading is complicated, and it may be the least appealing activity you will undertake as a teacher," he warns. "But it still has to be done."

This all changed in December of 2014 when I stumbled upon an article by Linda Nilson about something called "specifications grading"--an approach that seemed just radical enough to reset the traditional dynamics.[2] I wasn't sure whether or how it would work, but in the spring of 2015, I threw out my traditional script and made yet another attempt to reimagine my grading.

Hope springs eternal.

So what is it that makes this system of grading so radical? Without going into all possible details, I'll just summarize here what I take to be its three most important features.

- Grades are entirely (that is ONLY) a reflection of the extent to which the student has mastered the learning outcomes of your course, and the outcomes (as well as standards of mastery for each) are "specified" in advance.

- Grades on individual assignments are binary (is the outcome mastered or not?), so the grading of individual assignments does not admit of degrees. Your final grades can admit of degrees in one of two ways (or a combination of both). The continuum of grades can be assigned on the basis of how many of your learning outcomes students have mastered. Or, alternatively, semester grades can be assigned on the basis of how often your learning outcomes have been mastered in various assignments. So in the first case, the student who masters every outcome ends the semester with an A, the student who masters all but one ends the semester with an A-, etc. In the second case, the student who is able to consistently master a particular learning outcome gets a higher grade than the student who is only able to display mastery of that outcome half the time, etc.

- Finally, students in the same course are not required to complete the same set of assignments. Because the semester grade only reflects mastery, students are not penalized for missing assignments. Those who are able to master an outcome early need not complete further assignments designed to assess that outcome. And students who struggle with a particular outcome are able to complete more assignments until they achieve mastery (or the semester ends).
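For readers who think more easily in code, the three features above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the system from any of the syllabi discussed here: the names `Attempt`, `semester_grade`, and `mastery_rate` are hypothetical, and so is every rung of the grade ladder below "A-" (the description above only fixes "all outcomes mastered is an A, all but one is an A-").

```python
from dataclasses import dataclass

# Hypothetical ladder: index = number of outcomes NOT mastered.
# Only the first two rungs come from the text; the rest are assumed,
# and a real syllabus would calibrate them to its own outcome count.
GRADE_LADDER = ["A", "A-", "B+", "B", "B-", "C+", "C", "C-", "D", "F"]


@dataclass(frozen=True)
class Attempt:
    outcome: str    # the learning outcome this assignment assesses
    mastered: bool  # binary judgment: specification met or not


def mastered_outcomes(attempts):
    """Outcomes with at least one successful attempt."""
    return {a.outcome for a in attempts if a.mastered}


def semester_grade(outcomes, attempts):
    """First variant: grade by how many outcomes were mastered.

    Failed or skipped attempts carry no penalty; only the set of
    outcomes still unmastered at the end of the term matters.
    """
    missing = len(set(outcomes) - mastered_outcomes(attempts))
    return GRADE_LADDER[min(missing, len(GRADE_LADDER) - 1)]


def mastery_rate(outcome, attempts):
    """Second variant: how often an outcome was mastered when attempted."""
    tried = [a for a in attempts if a.outcome == outcome]
    return sum(a.mastered for a in tried) / len(tried) if tried else 0.0


# Hypothetical student: masters "thesis" on the first try (and stops),
# needs two tries for "evidence", and never masters "counterargument".
outcomes = ["thesis", "evidence", "counterargument"]
attempts = [
    Attempt("thesis", True),
    Attempt("evidence", False),
    Attempt("evidence", True),
    Attempt("counterargument", False),
]
print(semester_grade(outcomes, attempts))  # A-
print(mastery_rate("evidence", attempts))  # 0.5
```

Notice that the failed first attempt at "evidence" has no effect on the semester grade in the first variant, while the second variant would count it against the student. That difference is the choice between grading on how many outcomes are mastered and grading on how often.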

If this is still too abstract for you, it might be helpful to see how this works itself out in actual syllabi. Although I will be making many changes to the system I used in my first experiment, you can find the language I used in my syllabus here. I also recommend my colleague Caleb McDaniel's significantly more elegant version here. And for those in STEM who are not afraid to amp up the complexity, take a look at Robert Talbert's plan for his math classes here.

So what is it about this system that most appealed to me? First, and most selfishly, it seemed like it would save a lot of time. Instead of spending hours deciding where a single assignment fell on five to ten different 20-point spectrums (one for each criterion in my rubric), I could design assignments to assess a single skill and simply make a single, up-or-down judgment after reading. And I wouldn't have to keep track of due dates, late penalties, or (hallelujah!) student excuses.

But unlike other timesaving systems, this approach seemed to make the process more, rather than less, fair. While I would still be relying on my gut to make quick decisions about assignments, the fact that this judgment would be binary and about a single skill meant that it was likely to be far more reliable than other quick judgments I had made about the details of assignments in the past. Also, this system seemed more just in a global sense. Insofar as we think grades should be a reflection of end-of-semester competency alone, traditional grading systems disadvantage those who do poorly at the beginning of the term and/or fail to comply with rules unrelated to learning.

Finally, this system seemed to produce a grade that was far more meaningful to students. Because you are required to specify your learning outcomes, as well as the specific standards for mastery, students know exactly what the goal is and whether or not they've achieved it. And if your semester grade is based on how many learning outcomes have been mastered, they have an even better sense of what they are and are not capable of doing at the end of the term. When students end the semester with a total number of "points" that reflects everything from attendance to extra credit, it's much harder for them to translate that into a clear sense of what they have and have not learned.

This all sounds great in theory, but did it actually work? Yes and no.

The grading was most certainly faster and less anxiety-inducing, as I expected it would be. And once they figured out what I was doing, students appreciated the freedom of optional assignments, flexible due dates, and the ability to make numerous attempts. Top students were also especially happy to get out of assessments of skills they had already mastered; they never felt like they were doing busy work and had more time and energy to focus on building advanced skills. Another unintended benefit of this approach was that I didn't have to waste my time grading assignments from students at the top and bottom. If students had mastered the skill in the past, I didn't have to keep reading their work. And if students were clearly not ready for (or uninterested in) displaying mastery of a particular skill, they were unlikely to submit the assignment.

Despite this success, there were three features of my first experiment that didn't work so well.

First, it was immediately clear that the traditional trade-offs between fairness, meaningfulness, and manageability were not entirely absent from specs grading. I still think the most manageable versions of specs grading are likely to produce grades that are orders of magnitude more fair and meaningful than the most punishing systems of traditional grading. But that doesn't mean specs grading won't tempt you to make your grading ever more fair and meaningful by increasing the complexity (and consequent workload).[3] I gave in to this temptation when making decisions about the number and types of outcomes I was going to assess, the number and types of assignments I offered, and the system I used to translate those attempts into an end-of-semester mastery grade.[4] The intricate system made sense in the abstract, but it led to some confusion among students and made things more difficult for me in the long run.

The second issue I encountered was likely unique to Rice but potentially instructive for all. When I designed my system, I spent a great deal of time thinking about which requirements would lead to a fair and meaningful distribution of As, Bs, and Cs at the end of the semester. Yet I completely forgot that my students could opt to take the course pass/fail up until the 10th week of classes (and that this was quite common in general education courses at Rice). This had a number of unexpected consequences, but the most important was that students who were not taking the class for a grade turned in very few assignments. Because specs grading does not require completion of any specific assignments, my pass/fail students only needed to complete the number of assignments necessary to display mastery of enough outcomes to pass the class. But because I spent so much time thinking about what was required to earn As, Bs, and Cs, I failed to ensure the requirements for a passing grade were rigorous enough.

Finally, my experiment revealed that one of the greatest strengths of specs grading--the autonomy granted to students to determine when and how they display mastery--was also its greatest risk. More specifically, I learned that if you are teaching at an institution where students are overcommitted and used to performing well on their first attempt, they are likely to opt out of the earliest assignments.[5] The problem with this practice was two-fold. It limited the amount of time students spent practicing the skills I wanted them to learn. But it also reintroduced (and amplified) the pressures of the traditional grading system when their final grade hinged on a single attempt to display mastery at the end of the semester. Specs grading is designed to reduce high-stakes assessment, but because it gives students a great deal of autonomy to control how they are assessed, there is a risk that they will reintroduce those stakes with their own (poor) decisions.

So, when all is said and done, what did I take away from my experiment?

First, it is important to keep your specifications system simple. Pick a few central outcomes, set limits on the number of assignments offered to students, determine the final grade on the basis of how many outcomes have been mastered (rather than how often or in which order), and assume that a single display of mastery is a representative sample (even if it might not be).

Second, if you want to provide guardrails for your students, it is important to modify Nilson's approach by requiring a number of ungraded practice assignments, as well as the completion of early assessments for each outcome. These revisions will give students a more realistic sense of their abilities early in the semester and (hopefully) prompt them to value consistent practice and feedback in each domain.

But finally, and most importantly, I learned that specifications grading has the potential to repair my fraught relationship with grades and grading. I would still prefer a system without letter grades, but specifications grading appears to be our best hope to challenge the system from within.

[1] To ensure that I could be confident that my grades were at least meaningful to my students, I spent hours designing detailed rubrics and providing detailed explanations of my grades for every student on every assignment. And to ensure that I could be confident that my grading standards were fairly applied, I took up John Bean's deeply humane (but also laughable, in retrospect) advice to grade every assignment three times (for the record, I think Bean's book is one of the best out there in terms of pragmatic advice for teachers; don't let this particular passage scare you away). When I realized this approach was pretty much the definitional opposite of manageable, I began to read everything I could about ways to streamline the grading process. I assigned more work I didn't actually grade, relied more heavily on peer review, forced myself to write less by sitting on my hands as I read (seriously), set an egg timer for each assignment, reduced the number of criteria in my rubrics, and began to trust my gut intuitions more. These new strategies were certainly successful at giving me more time. But if I was being honest with myself, I also knew that my grades were more arbitrary than they had been in the past and that what they communicated to students was less meaningful. The grades were not entirely arbitrary and meaningless, of course, but I knew that I could be doing better on each of those fronts. So while my sleep increased to reasonable levels, my anxiety about the grading process remained relatively fixed.

[2] Nilson also published a book on the subject in 2014 and has since provided further summaries of the method in a variety of articles and interviews. As more faculty begin to experiment with the method, you can also find a variety of blogs chronicling experiences adapting the system to various disciplines. Of note here are Robert Talbert's reflections on his use of specs grading in his Computer Science and Math courses. It should also be noted that, as with many things in higher education, Nilson is essentially borrowing and adapting practices that have been in use for quite some time among K-12 educators. In this case, "specifications grading" is a modified version of the "standards-based grading" systems proposed by Rick Stiggins and others.

[3] Robert Talbert explains this trade-off well here.

[4] I also added a further degree of complexity to Nilson's original system by following McDaniel's practice of grading all assignments on a three-point scale ("developing," "emerging," or "mastered"). I'm not sure the middle category was really meaningful to my students (or at least not more meaningful than my feedback alone would be) and it definitely slowed me down. So I don't plan to use it in the future.

[5] Because Rice students are able to switch to a pass/fail designation up until the end of the 10th week of classes, students were even more likely to put off early assignments. They figured they'd be able to display mastery on later assignments and, if they couldn't, they would just switch the course to pass/fail. My hunch is that part of the reason I had so many students take the course pass/fail was that the freedom of specs grading allowed them to get themselves into more trouble than they otherwise would have been in if I had required completion of early assignments.

Posted on February 16, 2016 by Elizabeth Barre.